Two-faced AI models learn to hide deception. Just like people, AI systems can be deliberately deceptive: 'sleeper agents' seem helpful during testing but behave differently once deployed : r/Futurology

By A Mystery Man Writer

Using generative AI to imitate human behavior - Microsoft Research

1 Introduction - Interpretable AI: Building explainable machine

Two-faced AI models learn to hide deception

AI Unplugged: Decoding the Mysteries of Artificial Intelligence

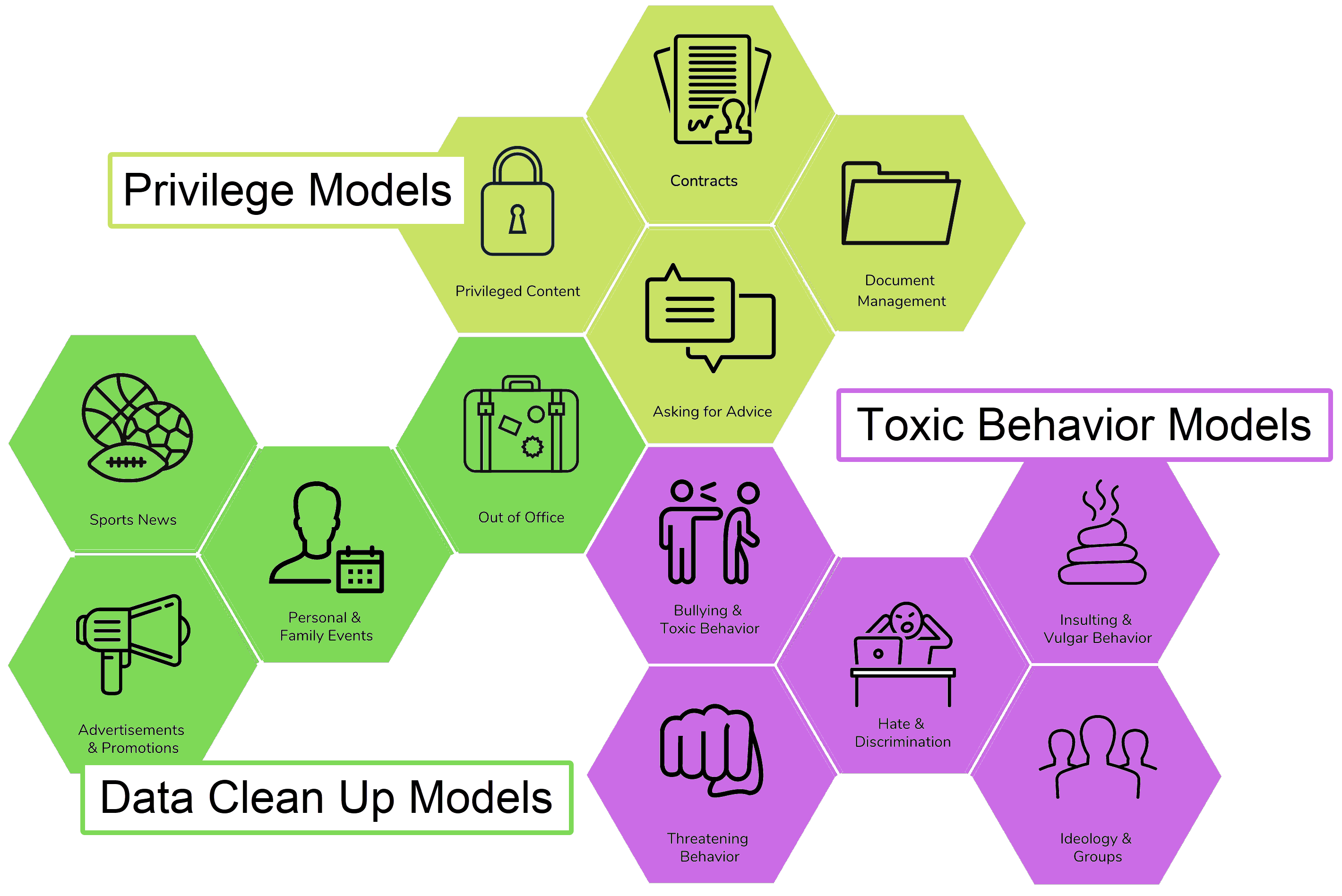

Layering Legal AI Models for Faster Insights

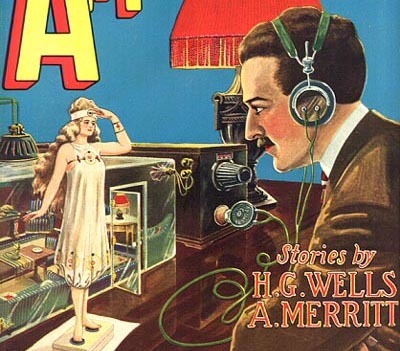

Fooled by AI?

Steve Dept (he/him/his) on LinkedIn: Anthropic researchers find

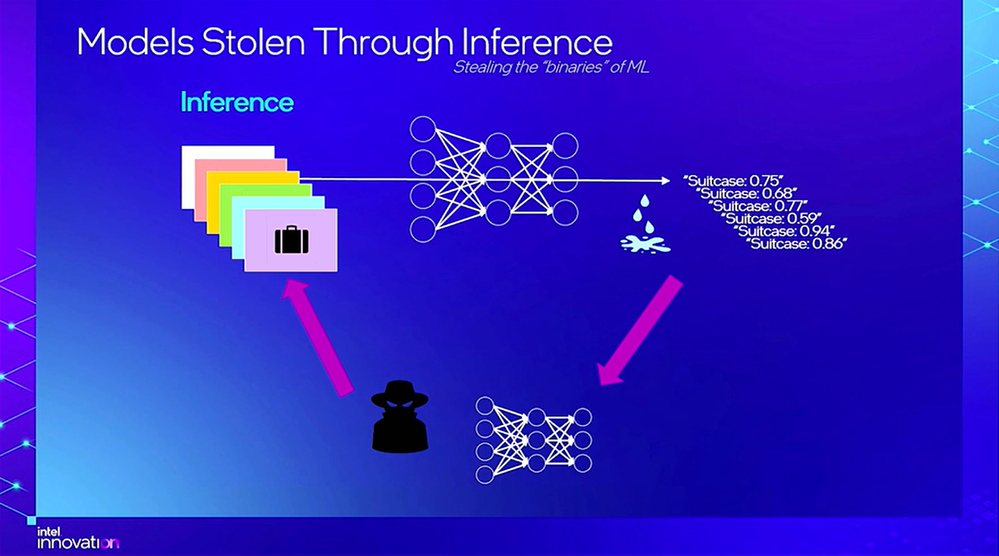

Responsible AI: The Future of AI Security and Privacy - Intel

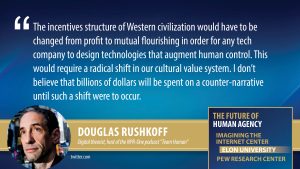

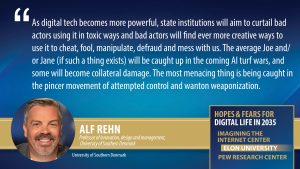

The Future of Human Agency, Imagining the Internet

Predicting the Best and Worst of Digital Life By 2035

Safeguarding AI: Tackling Security Threats

Inclusivity Is Essential. Are We Failing To Teach AI To Recognise

AI models can learn to be deceptive, new study warns

Losing the Plot: From the Dream of AI to Performative Equity